Scaling Decentralised Infrastructure

OnFinality provides reliable blockchain infrastructure including an automated API service and a dedicated node service for web3 developers building on Polkadot.

Find out how we scale our mission-critical infrastructure to over 150 million daily API requests

OnFinality's mission is to support network and application developers to build the future by providing mission-critical tools and infrastructure services. By far our most popular developer tool is our Enhanced API service.

Trusted by the biggest Polkadot networks and used by some of the largest applications on Polkadot, our Enhanced API Service allows you to access networks in minutes without ever worrying about infrastructure.

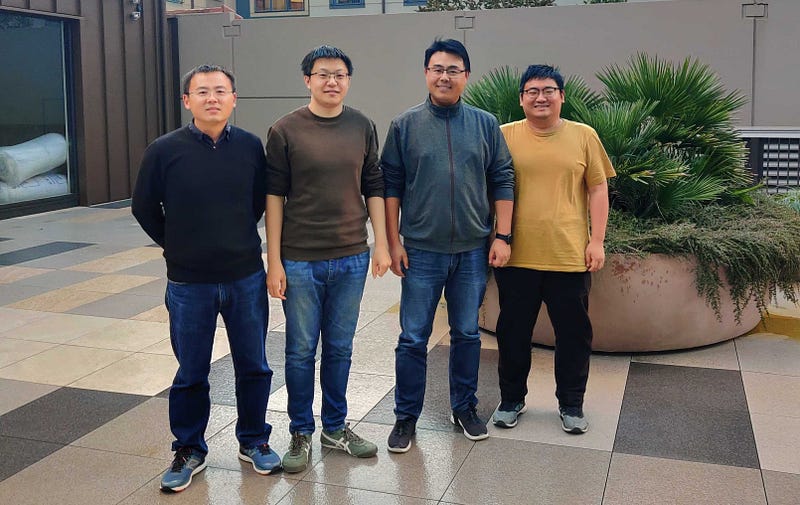

Today we’re going to talk about the architecture behind our API service, and some of the innovations that have allowed us to become one of the largest blockchain infrastructure providers in the world (even with only four full-time members in our technical team). We’ll also explain why we currently run only on Amazon Web Services (AWS) and Google Cloud Platform (GCP), rather than on smaller (and potentially cheaper) competitors.

OnFinality focuses on Substrate/Polkadot networks, and to date our busiest single day included over 157 million requests. Averaged over the day, that is more than 1,800 requests per second, roughly the same throughput as the Visa transaction network.
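The per-second figure is simple arithmetic on the daily total. Here is a minimal sketch of it; the function name is ours for illustration, and the result is a daily average, so real peaks within the day will be higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def average_requests_per_second(daily_requests: int) -> float:
    """Average request rate over one day (ignores peaks and troughs)."""
    return daily_requests / SECONDS_PER_DAY


# 157 million is the lower bound quoted for our busiest day.
busiest_day = 157_000_000
rate = average_requests_per_second(busiest_day)
print(f"{rate:,.0f} requests per second on average")
```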

Our entire operating approach has the following three factors held as the highest priority:

- Global Performance

- Automated Scalability

- High Reliability

Global Performance

What this means for us is that wherever you are, whether in Auckland with us, in the jungles of Mozambique, or on the beach in the Bahamas (we wish!), you (and your app’s users) are going to receive the best performance possible.

Latency is a big factor in our considerations. Take, for example, a request from where I am in Auckland to one of our clusters in Tokyo. The complete request time is 800ms, of which only 70ms is the time that our clusters spend working on your request. The majority of the time (~90% for me) is round-trip network latency.

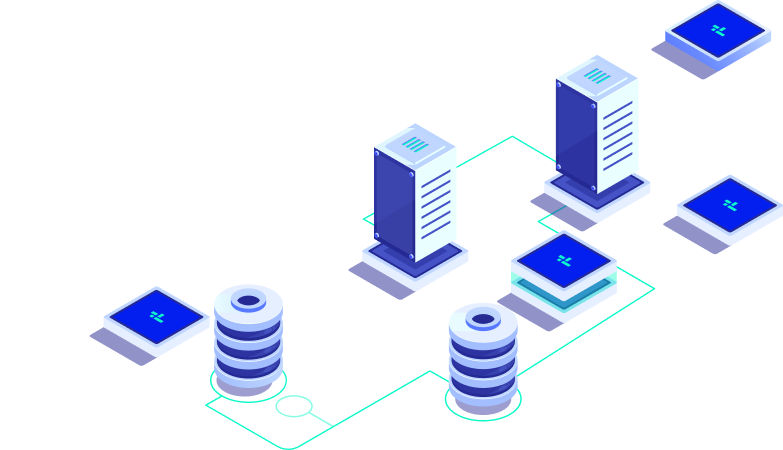
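To make the split concrete, here is a small sketch that works out the share of the request spent on the network, using the Auckland to Tokyo numbers above. The function name is just for illustration.

```python
def network_share(total_ms: float, processing_ms: float) -> float:
    """Fraction of the total request time that is not spent processing."""
    if total_ms <= 0 or processing_ms < 0 or processing_ms > total_ms:
        raise ValueError("expected 0 <= processing_ms <= total_ms and total_ms > 0")
    return (total_ms - processing_ms) / total_ms


# Figures from the Auckland -> Tokyo example: 800ms total, 70ms on our clusters.
share = network_share(total_ms=800, processing_ms=70)
print(f"{share:.1%} of the request time is round-trip latency")
```

Shaving a few milliseconds off processing barely moves this number, which is why moving the cluster closer to the user matters so much.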

That’s why we’ve focused on building intelligent geographic routing. It’s a new and continuously improving feature that automatically routes requests to the closest available node. Additionally, it allows us to immediately redirect all requests to a backup or alternative pool when any cluster goes out of service, giving us a fault-tolerant system in the case of a regional outage.

In the future it means that we’ll be creating a growing number of smaller clusters, tactically placed closer to our users. One of the benefits of the larger cloud providers is that they have a larger network with more distributed data centres closer to our users, something that smaller and cheaper cloud providers don’t offer.
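The idea of "closest available node, with failover" can be sketched in a few lines. This is an illustration under stated assumptions, not our production router: the `Cluster` type, the cluster list (only Tokyo is mentioned in this post; the other two are hypothetical), and the use of great-circle distance as a stand-in for measured latency are all assumptions made for the example.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt
from typing import List

EARTH_RADIUS_KM = 6371.0


@dataclass
class Cluster:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def route(user_lat: float, user_lon: float, clusters: List[Cluster]) -> Cluster:
    """Pick the nearest healthy cluster; unhealthy clusters are skipped entirely."""
    healthy = [c for c in clusters if c.healthy]
    if not healthy:
        raise RuntimeError("no healthy clusters available")
    return min(healthy, key=lambda c: haversine_km(user_lat, user_lon, c.lat, c.lon))


# Hypothetical cluster locations, for illustration only.
clusters = [
    Cluster("tokyo", 35.68, 139.69),
    Cluster("frankfurt", 50.11, 8.68),
    Cluster("virginia", 38.95, -77.45),
]
auckland = (-36.85, 174.76)

normal_choice = route(*auckland, clusters).name
clusters[0].healthy = False  # simulate a regional outage in Tokyo
failover_choice = route(*auckland, clusters).name
print(normal_choice, "->", failover_choice)
```

Distance is only a proxy here. A real router would more likely rank clusters by measured latency and health checks, but the selection logic has the same shape.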

Additionally we are working on smarter caching layers and the addition of lighter nodes to reduce the number of requests that need to go to our array of full archive nodes.
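As a rough picture of what a caching layer in front of the archive nodes does, here is a minimal time-to-live cache sketch. Everything in it is an assumption for illustration: the `TtlCache` class, the fake backend, and the six-second lifetime are not a description of our actual caching design. `chain_getBlockHash` is used simply as a familiar Substrate RPC method name.

```python
import json
import time
from typing import Any, Callable, Dict, List, Tuple


class TtlCache:
    """Tiny in-memory cache keyed by RPC method and parameters (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: Dict[Tuple[str, str], Tuple[float, Any]] = {}

    def get_or_fetch(self, method: str, params: List[Any],
                     fetch: Callable[[str, List[Any]], Any]) -> Any:
        key = (method, json.dumps(params, sort_keys=True))
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the archive node is never contacted
        value = fetch(method, params)  # cache miss: go to the backend
        self._store[key] = (now + self.ttl_seconds, value)
        return value


backend_calls: List[Tuple[str, List[Any]]] = []


def fake_archive_node(method: str, params: List[Any]) -> str:
    """Stand-in for a real archive node; records every call it receives."""
    backend_calls.append((method, params))
    return f"result-of-{method}"


cache = TtlCache(ttl_seconds=6.0)
first = cache.get_or_fetch("chain_getBlockHash", [100], fake_archive_node)
second = cache.get_or_fetch("chain_getBlockHash", [100], fake_archive_node)
print(len(backend_calls))  # the second identical request never reaches the backend
```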

Automated Scalability

We’ve built our service to scale with our customers, up to any size, without errors or performance degradation. This means that at times of extreme stress (e.g. Parity’s outage late April), we’ve been able to automatically (and in a short space of time) provision new compute resources and scale our systems to cope with unprecedented demand.

We have only four full-time members in our technical team at OnFinality, so we’ve done all this in an incredibly lean way. It’s important to realise that we have built our API service (and our dedicated node service) to be deployed and managed automatically from day one.

Some might make the argument that running dedicated compute resources, bare metal instances, or using a smaller provider might save costs. But when managing over 47 individual nodes (at the time of writing) during normal traffic for our API services in multiple regions around the world, management simplicity is the major concern for us.

Part of the solution here is a heavy reliance on Kubernetes and Terraform scripts, which smaller providers don’t support in their platforms or Kubernetes tooling. Additionally, we go with cloud providers that allow us to double our significant compute resources in minutes to react to daily demand spikes.
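To show what "double our compute in minutes" looks like as a scaling decision, here is a sketch of the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). Whether our clusters use exactly this rule is not something this post covers, so treat it as an assumption; the load numbers are hypothetical, and the real autoscaler also applies tolerances and stabilisation windows that are not modelled here.

```python
from math import ceil


def desired_replicas(current_replicas: int,
                     current_load_per_replica: float,
                     target_load_per_replica: float) -> int:
    """Proportional scaling: keep per-replica load near the target."""
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_load_per_replica <= 0:
        raise ValueError("target_load_per_replica must be positive")
    ratio = current_load_per_replica / target_load_per_replica
    return max(1, ceil(current_replicas * ratio))


# 47 nodes, each targeted at 40 requests/s (hypothetical), suddenly seeing 80 each.
scaled = desired_replicas(current_replicas=47,
                          current_load_per_replica=80,
                          target_load_per_replica=40)
print(scaled)  # 94
```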

High Reliability

It is our goal this year to hit 99.9% uptime for our services (excluding downtime caused by network or node image issues). In real terms, that means a maximum downtime of less than 9 hours each year. One night’s worth of downtime and we’ve missed our goal.

To hit this goal, and so our customers can be confident in our systems and service, we are building fault-tolerant systems and only using cloud providers that we know support this. We want to leverage the systems of larger providers (like Amazon and Google) to manage the lower-level routing, network, and resource allocation tasks so we can focus on the harder things bespoke to our domain. Going for a custom implementation, or even a bare metal server, would introduce significant risks in certain areas that we cannot accept.
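The downtime budget follows directly from the uptime target. A minimal sketch of the calculation, assuming a 365-day year (the function name is illustrative):

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year


def downtime_budget_hours(uptime_target: float) -> float:
    """Hours of downtime allowed per year for a given uptime fraction."""
    if not 0.0 <= uptime_target <= 1.0:
        raise ValueError("uptime_target must be a fraction between 0 and 1")
    return (1.0 - uptime_target) * HOURS_PER_YEAR


budget = downtime_budget_hours(0.999)
print(f"{budget:.2f} hours of downtime allowed per year")
```

For comparison, each extra nine cuts the budget by a factor of ten, so 99.99% would leave well under an hour per year.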

In summary, at our current scale and with the goals we have (99.9% uptime), we cannot afford to go with smaller and potentially cheaper cloud providers. We also need to keep our three goals in mind when building and managing our systems with our small team: global performance, automated scalability, and high reliability.

Cost is absolutely an issue for us, and we’re keeping a close watch on the exact dollar amount of cloud spend per thousand API requests as a primary metric. We’re exploring many different ways to reduce this, including alternative cloud providers or deployment structures.

We’re aiming to grow with Polkadot to 1 billion daily API requests, and our focus remains on building scalable, reliable, and distributed systems to remain the fastest Substrate infrastructure service.
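The metric itself is easy to compute once spend and request counts are known. Here is a minimal sketch; the spend figure below is a made-up placeholder used only to show the calculation, not our actual cloud bill.

```python
def cost_per_thousand_requests(cloud_spend_usd: float, requests: int) -> float:
    """Cloud spend in dollars for every 1,000 API requests served."""
    if requests <= 0:
        raise ValueError("requests must be positive")
    return cloud_spend_usd * 1000 / requests


# Hypothetical example: $1,200 of spend on a day with 150 million requests.
example = cost_per_thousand_requests(cloud_spend_usd=1200.0, requests=150_000_000)
print(f"${example:.4f} per thousand requests")
```

Tracking the figure per thousand requests rather than as a monthly total lets us compare providers and deployment structures independently of how much traffic grows.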